Addresses appear in nearly all businesses that are not purely virtual. And as with most things with ties to the physical world there are many ways how address of single geographical place can be written.

Customers will often omit certain parts of “official’ address or will use many often non standardized abbreviations, … .

These natural variations together with frequent typos can often lead to huge dataset of unique raw addresses.

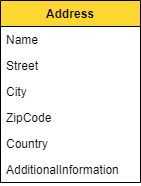

Now imagine yourself as developer with huge (6 000 000) dataset of addresses in some basic structure and with a burning desire to know how many individual places you actually have.

Addresses appear in nearly all businesses that are not purely virtual. And as with most things with ties to the physical world there are many ways how address of single geographical place can be written.

Customers will often omit certain parts of “official’ address or will use many often non standardized abbreviations, … .

These natural variations together with frequent typos can often lead to huge dataset of unique raw addresses.

Now imagine yourself as developer with huge (6 000 000) dataset of addresses in some basic structure and with a burning desire to know how many individual places you actually have.

Even on example of this really simple query we discover, that doing any analysis on our dataset can be nearly impossible in its current form.

This problem can be extrapolated even more when you imagine that you are for example in shipping industry and addresses are critical component of your business. In such case you may want to do much more complex queries (for example in order to optimize your delivery routes, etc.). Your dataset is also constantly growing so you cannot afford to process you addresses again and again.

The solution to the problem outlined above is grouping our addresses into groups where all addresses are written nearly identically. After this processing all your groups should contain addresses of the same place.

So, in technical terms we want to do clustering based on text similarity.

Simple clustering

Our clustering problem has several properties that dictate how our clustering algorithm will look.

- Unknown final number of groups.

- Must handle new data without need for computing everything from scratch.

- Large dataset (6 000 000 addresses) support.

These properties restrict us to clustering algorithms which can cluster data in single pass and do not require prior knowledge of cluster count.

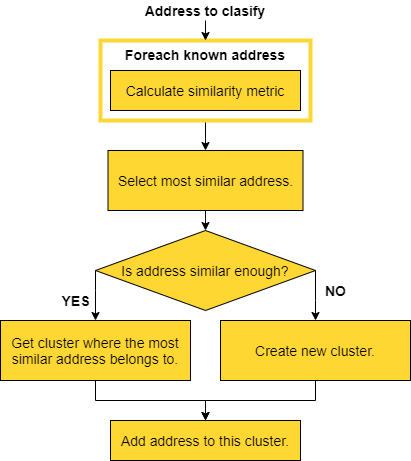

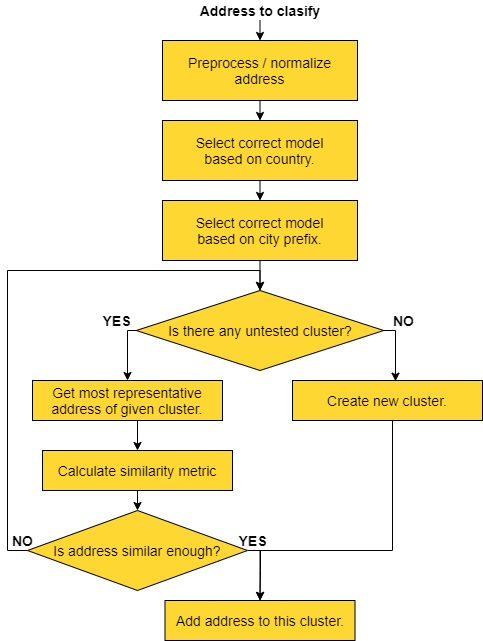

Such Algorithm for address classification could be as simple as:

We can easily tell that such clustering algorithm easily satisfies the first two criteria of suitable clustering algorithm.

Unknown final number of groups

Creation of new clusters is dynamic based on whether address could be added to any existing cluster or whether new cluster should be created.Must handle new data without need for computing everything from scratch.

Entire clustering process is done in single pass. We just clusterize addresses as they come. Due to handling every address just once we sacrifice ability to find even better cluster which could have been formed from newly come addresses.Large dataset (6 000 000 addresses) support.

For determining whether we cloud process our dataset we would need to do some guesstimates.We have \(6\,000\,000\) addresses, so there would be \(\frac{6000\,000^2}{2}\) comparisons.

The division by two is there because we are gradually comparing each address to more and more already existing addresses.

The count of addresses to power of two is the worrying part here. Because even after division by 2 we would still need to perform \(18\,000\,000\,000\,000\) comparisons.Let’s say that comparing one address to another takes (separate comparisons for street field, city field …) takes 0.1ms.

If you don’t have rest of your lifetime to watch progress bar to go by than we will need to do some heavy optimalizations.

Optimalizations

Our problem is the number of comparisons we need to make.

Reducing number of comparisons

Currently we are comparing currently processed address to all known addresses and then selecting the most similar one. If we sacrifice finding the best one and settle for finding first address that is good enough (JaroWingler distance is lower than epsilon) than we can reduce number of comparisons drastically.

This optimalization alone doesn’t solve our problems alone because in the worst scenario (no known address is close enough thus we need to create new cluster) the number of comparisons is the same.

The second optimalization we can make is not to compare address against all known addresses but compare the currently processed address just against most representative addresses from the current clusters than the number of comparisons would go down by a lot.

(This approach is called leader clustering)

If we have on average 5 addresses per single geographical place (average cluster size is 5) than we could guess that total number of comparisons would be 5 times less.

That is great progress, but it still means that we would need to wait more than 11 years.

Reducing number of clusters

There is not a much we could do in further speed optimalizations of the algorithm.

Our only possibility is to somehow reduce number of clusters.

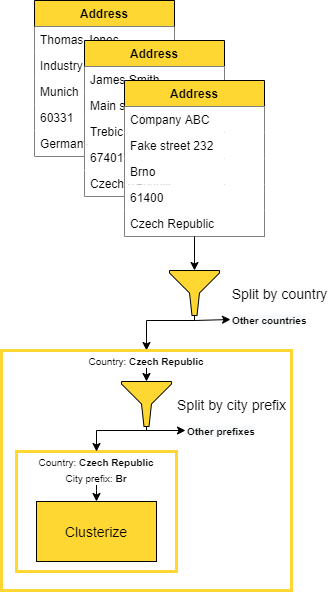

In order to do so we need to split our big dataset into number of smaller ones. On our example with addresses the most common splitting point would be field containing country.

If we can achieve that single country is not written in multiple ways than we can cluster data for each country separately. We have number of ways how to increase chance that all addresses have the country written in single way.

Most **reliable would be to force users to select country from prepared dataset. If this is not possible than we can try to preprocess all addresses and translate provided translate into some ISO country code (Czech Republic / Czechia => CZ).

This way we would create N separate clustering problems which could be handled separately, and the training could even be done parallelly.

Speed estimate + final optimalization

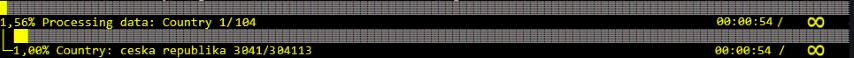

If we estimate that most common country in our dataset has 50% of addresses associated with it than we need to cluster 3 000 000 addresses.

With guess that on average there is 5 addresses in cluster the number of clusters for that country would be 600 000.

Thus, we would need to make \(\frac{600\,000^2}{2}\) comparisons. That is 1% of comparisons needed by the original algorithm.

Unfortunately, this still means that we would need to wait half a year.

Fortunately, the approach with splitting data into smaller clusters can be applied again to our country clusters. For example, we could split each of the countries into number of clusters based on first letter (or two, ..) of city.

This will cause addresses with typos in first letter of city to be misplaced but we can hope than number of errors in the first letter is minimal.

If we estimate that 20% of addresses of given country have single city prefix than the final number of clusters is \(600\,000*0.2=120\,000\) which results in \(7\,200\,000\,000\) comparisons.

This means that we have reduced the complexity to 0.05% of our initial solution. Under these assumptions our dataset can be processed (with sufficient parallelization) in little over 8 days.

The reduction from 57 years to 8 days is awesome but not without downsides.

Now we must ensure that countries are represented in consistent way and our data could not contain errors in first letter of field where city name is stored.

Final solution

To recapitulate, in order to cluster large (growing) dataset of addresses in a way that each cluster represents single geographical place (similarly written addresses) we need to:

- Minimalizing consistency via preprocessing

- fixing capitalization

- trimming whitespaces

- replacing special characters with common alternatives (č => c , ß => ss, …)

...

- Splitting data via country and then first N letters of city

- This allows us to reduce time complexity by several magnitudes

- Finding cluster where representative address of given address is close enough.

- If such address is found (JaroWingler distance is under epsilon) than add currently processed address to this cluster.

- If no suitable cluster is found create new one.

Precision

Precision of this algorithm relies on the chosen parameters such as epsilon (limit where address is considered close enough) and weights of individual fields on address similarity calculation.

For epsilon selection some experimentation is needed, but for weights I would suggest higher weight on address name and street and lower (or completely ignoring) on fields containing additional information (such as note,).

Via selection of epsilon, we can tune ratio of not categorized addresses to wrongly categorized.

For correctly set parameters (with goal of minimalizing wrongly categorized at expense of higher count of not categorized addresses) you should be getting under 0.2% of wrongly categorized and something about 10% uncategorized addresses.